Highly Available Kubernetes Cluster

Kubernetes… everyone’s favourite container orchestrator. Love it or hate it, it is fast becoming the de facto solution for managing containers at scale. This blog post goes step by step through creating a Kubernetes (k8s) cluster on a local machine, from the first command to all nodes being ready, in about 12 minutes. It assumes readers have basic knowledge of k8s. If you haven’t heard of k8s, you should read this.

Before we jump into the guide, I will outline the tools and the kind of k8s cluster we are going to create. We know that creating a k8s cluster is complex. kubeadm has made the process easier, but there are still steps that feel like a maze. Do you still need docker with k8s? What are the various container runtimes? Why are there so many choices for pod networking? What do you do with the ‘cgroup driver’? This blog post goes through each step and aims to be a complete guide to creating a highly available Kubernetes cluster.

The plan is to create a series of blog posts to create a production-grade k8s cluster. The first step is to create an HA cluster. This is important because we do not want to have any single point of failure in our architecture. At the end of this post, we will deploy a vanilla nginx web server to demonstrate that we can successfully access its pod with the correct port number.

The second part of the series is going to be about deploying more complex applications. A popular architecture for a web application is a single-page application (SPA) frontend talking to an API backend on a specific port. We will see how we can configure both k8s and our applications to achieve this. We will throw in observability, which includes prometheus, jaeger, and loki, as well as grafana for visualisation.

The final part is going to be about an ingress controller, so that we can access the applications on our local machine from the internet. We will need a domain name for this, and we will configure DNS to point to our k8s cluster and see how our virtual IP (floating IP) from keepalived plays into this. To make our cluster more production-grade, we will also look into backing up and restoring the etcd database.

This first part of the guide did not come out of a vacuum. I referred heavily to this video https://youtu.be/SueeqeioyKY and to the highly available (HA) section of the kubeadm docs at https://github.com/kubernetes/kubeadm/blob/main/docs/ha-considerations.md#options-for-software-load-balancing. The full list of references is at https://github.com/gmhafiz/k8s-ha#reference.

Why is k8s short for kubernetes? There are 8 letters between ‘k’ and ‘s’ 😎

If you do not want to read the rest, tldr:

git clone https://github.com/gmhafiz/k8s-ha

cd k8s-ha

make install

make up

make cluster

This is part of a series of blog posts including:

- Part 1: Highly Available Kubernetes Cluster - with vagrant and libvirt

- Part 2: Deploy Complex Web App - Create a Vue3 frontend, Go API, one postgres database

- Part 3: (Coming!) Ingress Controller - Backup and restore etcd, access local k8s cluster from the internet

Preface

There are many third-party tools you can use to create a local k8s cluster, such as minikube, kind, k3s, and k0s, among others. Minikube is mostly used to create a single node, so it is hard to show how we can create a highly available cluster with it. Kind can create a multi-node cluster, which is great, and it is a certified installer, just like k3s and k0s, which I have not played around with yet. The method I am choosing is kubeadm because it is easy-ish to use, with the right amount of magic and transparency. To provision the nodes (virtual machines) I use vagrant with libvirt. Provisioning all eight nodes is fast: not counting the time to download the images, it takes about a minute to get all VMs up.

To create a k8s cluster, a series of commands needs to be run on some or all nodes. We could copy and paste each command into the relevant VMs, but there is a simpler way. Ansible allows us to declare the desired state of each VM. With a single command, it can run the same tasks on all the nodes we point it at.

If you want to administer the cluster from a local machine, you can install kubectl. Once the cluster is installed, the config file can be copied to $HOME/.kube/config on your machine.

Tools we are going to use:

- vagrant

- kvm / libvirt

- ansible

- haproxy and keepalived, for a highly available architecture with a floating IP

K8s spec:

- v1.26

- kubeadm

- Debian 11 Bullseye

- CRI: containerd

- CNI: calico

vagrant is our VM management workflow. It uses a declarative Vagrantfile (written in Ruby) that defines the desired state of our VMs. Creating the VMs is just a simple vagrant up command. There is a caveat in the way networking is done, though. The IP address we are concerned with is on eth1, not on eth0 as you would find on AWS EC2 or DigitalOcean instances provisioned with terraform. I am not sure why this is the case. It may have something to do with the way the vagrant box is packaged. You can absolutely skip this tool and use libvirt directly to create VMs, but I like having the definition in a file where it can be versioned.

kvm, or ‘Kernel-based Virtual Machine’, is a Linux kernel module that lets the kernel function as a hypervisor. Basically, it allows us to run hardware-accelerated virtual machines.

libvirt is a common interface to virtualisation technologies. It manages hypervisors like kvm, qemu and others, and it is essentially the one that creates and destroys VMs. It is possible to use virtualbox instead by adding --provider=virtualbox to the vagrant up command, but in my experience libvirt VMs are faster than virtualbox ones.

ansible can send shell commands to each of our VMs simultaneously as long as it can SSH into each of them, so we save on typing and copy-pasting. The commands are defined declaratively in files called ‘playbooks’, just like the ‘Vagrantfile’. Not all the playbooks are idempotent yet, which is one thing I would like to fix.

A virtual IP from keepalived sits on top of the two haproxy load balancers. In case one load balancer goes down, our floating IP will point to our backup load balancer. Since we are installing the cluster manually, we need to create such a floating IP ourselves. Managed solutions like eks, gke, or aks provide an external load balancer for you if you have chosen an HA setup.

The latest k8s version as of now (2022-12-11) is 1.26.0. The biggest change in recent years is the removal of dockershim in v1.24, which means it is advisable to use containerd directly or another container runtime like CRI-O.

kubeadm is an easy-to-use tool to create a k8s cluster. The official guide is pretty shallow, which is why this blog series was created. kubeadm simplifies the creation of a k8s cluster, and you do not have to deal with tedious tasks like certificate creation, SSL setup, firewall settings, network configuration, creating the etcd database and many others. Other choices for creating a k8s cluster have been mentioned above: k3s, kind, minikube, and of course Kelsey Hightower’s Kubernetes The Hard Way.

Debian 11 Bullseye is the distro of choice. I like stable, Long-term Support (LTS) distros like Debian and Ubuntu because you get security updates for ~5 years. There’s nothing wrong with choosing Ubuntu, but Debian is seen to be more stable and would have a smaller attack surface. Alternatives include distros like Rocky Linux and AlmaLinux, which have ten years of security support. There are options for an immutable OS like Talos, and a self-updating one like Fedora CoreOS too. Ultimately, the base operating system should not matter much.

containerd is the container runtime of choice; it implements the container runtime interface (CRI). It is responsible for creating and destroying the containers that make up pods (think of a pod as being to k8s what a container is to Docker). It is simple and works reliably. Another choice, as mentioned before, is CRI-O. One thing that needs to be checked is the compatibility between the installed containerd and kubelet. For example, k8s version 1.25.4 worked fine, but when I tried k8s version 1.26, the containerd package that comes with Debian turned out to be too old and unsupported.

calico is one of many choices for a container network interface (CNI) plugin. It is responsible for allocating pod IP addresses. During cluster initialisation, we need to supply a ‘Classless Inter-Domain Routing’ (CIDR) range for the pods, and the expected range differs from one CNI to another. Other choices are Flannel, Weave Net, Cilium, etc. There isn’t any particular reason why I am using calico other than it being the most popular.

As you can see, we use five tools not directly related to k8s (vagrant/libvirt/ansible/haproxy/keepalived). While fewer tools mean less learning friction (and fewer things to break!), these tools help us achieve what we want. Vagrant isn’t strictly needed, but it is good to have your node topology versioned. If you go for a managed HA k8s provider, you need neither haproxy nor keepalived. If you use cloud VMs, you need neither vagrant nor libvirt, since you no longer need to create VMs yourself or own bare-metal machines. Ansible is still going to be greatly beneficial in either case.

What is not covered

This post is long, but it does not cover everything you need to know about creating an HA k8s cluster.

- I only tested creating VMs on a single machine, not across multiple physical machines.

- Managed k8s like eks, gke, or aks, which include a load balancer, is not covered.

- Software topics such as deploying apps, secrets management, and ingress are covered in the next parts of the series.

Topology

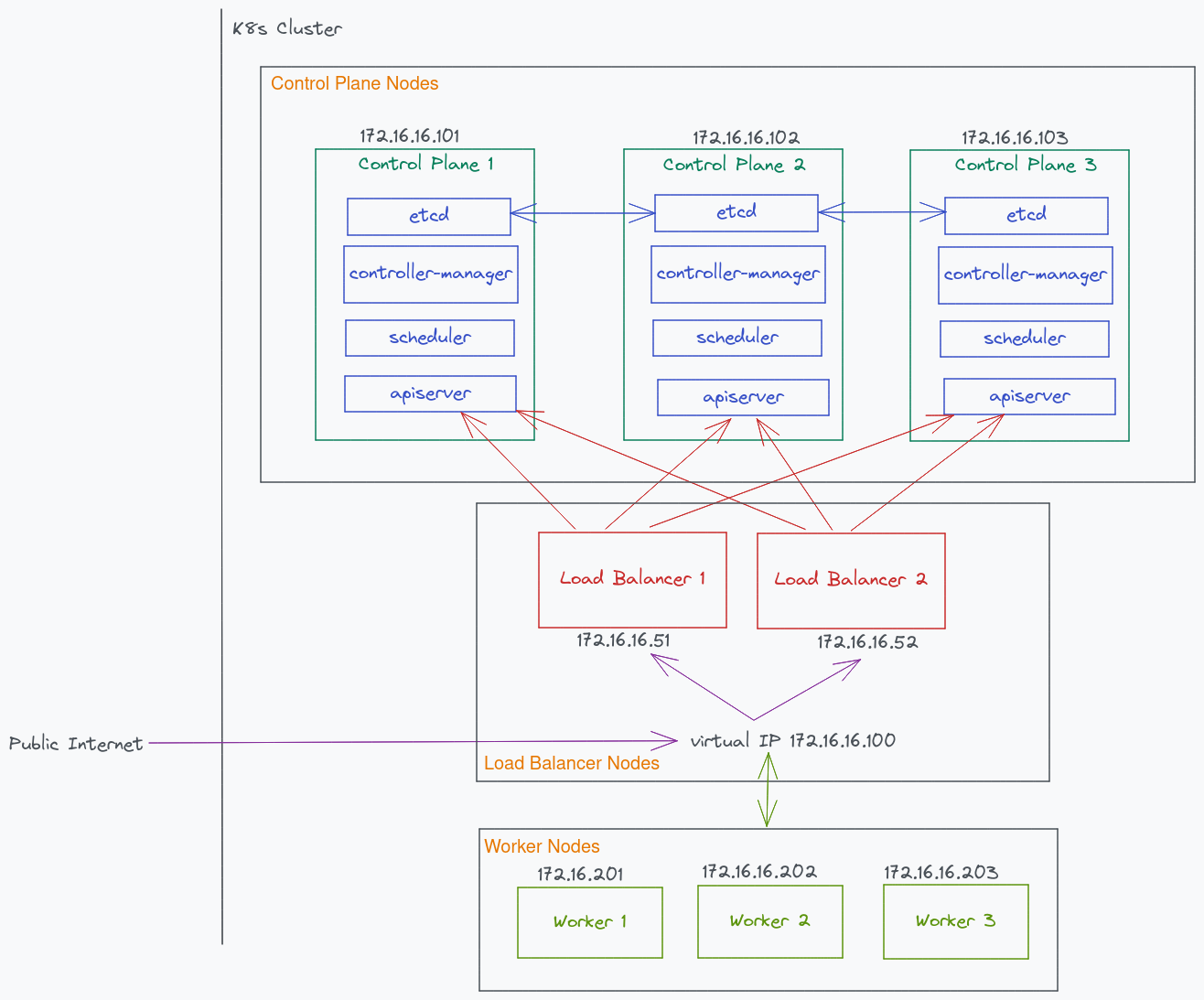

Highly available k8s cluster means that there isn’t any single point of failure. Before we take a look at our architecture, consider the following topology from official docs,

There are three control plane nodes, which ensures that if one of them goes down, the other two can take care of all k8s operations. An odd number is chosen because the raft consensus algorithm used by etcd needs a majority (quorum) of members to elect a leader: three members tolerate one failure, and a fourth member would not add any extra tolerance.
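To make the odd-number argument concrete, here is a minimal sketch of the majority arithmetic behind raft. The function names are my own and only illustrate the calculation; they are not part of etcd or k8s.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting nodes."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


# Print the trade-off for small cluster sizes.
for n in range(1, 6):
    print(f"{n} members: quorum={quorum(n)}, tolerated failures={fault_tolerance(n)}")
```

Going from three to four members raises the quorum from two to three without tolerating any additional failure, which is why even member counts are usually avoided.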

The same goes with worker nodes. There are at least three nodes, and they have an odd number, just like the control plane. However, that page does not elaborate on the load balancer. If that load balancer node fails, outside traffic cannot access anything on the cluster. For that, we need to refer to https://github.com/kubernetes/kubeadm/blob/main/docs/ha-considerations.md#options-for-software-load-balancing.

In our chosen architecture, instead of a single load balancer, we create two of them and put a floating IP in front.

There are a number of options for a load balancer, including nginx, traefik, or even an API gateway. I chose haproxy because it is not only battle-tested, but also small and light on resources.

As a result, this is the topology I have chosen

Etcd is still hosted on each control plane node like before. But now, there are two load balancers that proxy requests to one of the three apiservers, one on each control plane node. If one load balancer goes down, traffic switches over to the second load balancer. Notice that I used a virtual IP (floating IP), created by keepalived, as the entry point. This floating IP points to whichever load balancer is currently healthy (the Master), and the other one (the Backup) serves as the fail-over node. Finally, each worker node communicates with the virtual IP.

The specs of each node are as follows. 2GB of RAM and 2 CPUs per k8s node is the minimum required for k8s. Haproxy and keepalived are pretty light, so I only give 512MB and 1 CPU to each load balancer node. The haproxy website gives a recommendation on server specs if you are interested in customising this.

| Role | Host Name | IP | OS | RAM | CPU |
|---|---|---|---|---|---|
| Load Balancer | loadbalancer1 | 172.16.16.51 | Debian 11 Bullseye | 512MB | 1 |
| Load Balancer | loadbalancer2 | 172.16.16.52 | Debian 11 Bullseye | 512MB | 1 |
| Control Plane | kcontrolplane1 | 172.16.16.101 | Debian 11 Bullseye | 2GB | 2 |
| Control Plane | kcontrolplane2 | 172.16.16.102 | Debian 11 Bullseye | 2GB | 2 |
| Control Plane | kcontrolplane3 | 172.16.16.103 | Debian 11 Bullseye | 2GB | 2 |
| Worker | kworker1 | 172.16.16.201 | Debian 11 Bullseye | 2GB | 2 |
| Worker | kworker2 | 172.16.16.202 | Debian 11 Bullseye | 2GB | 2 |
| Worker | kworker3 | 172.16.16.203 | Debian 11 Bullseye | 2GB | 2 |
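Before provisioning, it helps to know whether the host machine can actually carry all eight VMs. The following is a small sketch that adds up the table above; the `NodeGroup` type is a name I made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    role: str
    count: int
    ram_mb: int
    cpus: int


# Values taken from the table above.
GROUPS = [
    NodeGroup("load balancer", count=2, ram_mb=512, cpus=1),
    NodeGroup("control plane", count=3, ram_mb=2048, cpus=2),
    NodeGroup("worker", count=3, ram_mb=2048, cpus=2),
]


def totals(groups):
    """Return (number of VMs, total RAM in MB, total virtual CPUs)."""
    nodes = sum(g.count for g in groups)
    ram_mb = sum(g.count * g.ram_mb for g in groups)
    cpus = sum(g.count * g.cpus for g in groups)
    return nodes, ram_mb, cpus


nodes, ram_mb, cpus = totals(GROUPS)
print(f"{nodes} VMs need {ram_mb / 1024:.0f} GB of RAM and {cpus} virtual CPUs")
```

Virtual CPUs can be overcommitted on the host, but the RAM figure is a fairly hard requirement, so plan for roughly 13 GB plus whatever the host itself needs.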

Preparation

This guide assumes you haven’t installed any of the tools yet. If you have, skip to the Create K8s Cluster section.

Before going any further, we need to check whether your CPU supports hardware-accelerated virtualization for the purpose of creating VMs. On a Debian-based distro, first install libvirt.

sudo apt update && sudo apt upgrade

sudo apt install bridge-utils qemu-kvm virtinst libvirt-dev libvirt-daemon virt-manager

To check if your CPU supports hardware-accelerated virtualization, run the kvm-ok command. A success message looks like this:

$ kvm-ok

INFO: /dev/kvm exists

KVM acceleration can be used

Next, install vagrant

wget -O- https://apt.releases.hashicorp.com/gpg | gpg --dearmor | sudo tee /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update && sudo apt install vagrant

vagrant plugin install vagrant-libvirt vagrant-disksize vagrant-vbguest

The vagrant-libvirt plugin is the key piece that allows us to create VMs using libvirt. It is possible to use virtualbox as well, so I included the vagrant-vbguest plugin too.

To manage your k8s cluster from a local machine, you need to install kubectl. It is highly recommended that you use the same version for both kubectl and kubelet. So when you are upgrading k8s in the VMs, upgrade kubectl on your local machine to the same version too.

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

Clone the repository with

git clone https://github.com/gmhafiz/k8s-ha

Finally, create a public-private key pair exclusive for accessing the VMs using SSH keys. Accessing this way should be favoured over accessing using username/password nowadays.

ssh-keygen -t rsa -b 4096 -f ansible/vagrant

chmod 600 ansible/vagrant

chmod 644 ansible/vagrant.pub

It is possible to use your own public key if you have one. Simply change all occurrences in the Vagrantfile to the path of your ~/.ssh/id_rsa.pub. For example,

ssh_pub_key = File.readlines("./ansible/vagrant.pub").first.strip

# to (Ruby does not expand ~ by itself, hence File.expand_path)

ssh_pub_key = File.readlines(File.expand_path("~/.ssh/id_rsa.pub")).first.strip

Create K8s Cluster

Provision VMs

Now the fun part begins. To provision the VMs, simply run

vagrant up

link to file https://github.com/gmhafiz/k8s-ha/blob/master/Vagrantfile

If you are running this for the first time, it will download a Linux image called a vagrant box. If you look into the Vagrantfile, we use Debian 11 Bullseye with box version 11.20220912.1. Note that the sudo password is kubeadmin, which is set in the bootstrap.sh file.

VAGRANT_BOX = "debian/bullseye64"

VAGRANT_BOX_VERSION = "11.20220912.1"

You can also use Ubuntu 20.04, but I haven’t managed to get Ubuntu 22.04 to work.

VAGRANT_BOX = "generic/ubuntu2004"

VAGRANT_BOX_VERSION = "3.3.0"

We also define how many CPUs and how much RAM (in MB) each type of node gets.

CPUS_LB_NODE = 1

CPUS_CONTROL_PLANE_NODE = 2

CPUS_WORKER_NODE = 2

MEMORY_LB_NODE = 512

MEMORY_CONTROL_PLANE_NODE = 2048

MEMORY_WORKER_NODE = 2048

For a minimal HA setup, we use the following node counts:

LOAD_BALANCER_COUNT = 2

CONTROL_PLANE_COUNT = 3

WORKER_COUNT = 3

K8s Cluster

Ansible is our choice for sending commands to the selected nodes. Change into its folder,

cd ansible

Firstly, we take a look at provisioning our VMs. We define the details and grouping of the VMs

using a hosts file.

[load_balancer]

lb1 ansible_host=172.16.16.51 new_hostname=loadbalancer1

lb2 ansible_host=172.16.16.52 new_hostname=loadbalancer2

[control_plane]

control1 ansible_host=172.16.16.101 new_hostname=kcontrolplane1

control2 ansible_host=172.16.16.102 new_hostname=kcontrolplane2

control3 ansible_host=172.16.16.103 new_hostname=kcontrolplane3

[workers]

worker1 ansible_host=172.16.16.201 new_hostname=kworker1

worker2 ansible_host=172.16.16.202 new_hostname=kworker2

worker3 ansible_host=172.16.16.203 new_hostname=kworker3

[all:vars]

ansible_python_interpreter=/usr/bin/python3
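If you change the node counts in the Vagrantfile, the hosts file has to follow. Here is a rough sketch that regenerates the inventory text. The address offsets (50, 100, 200) are inferred from the hosts file above and are an assumption about how the Vagrantfile numbers its VMs, and the `inventory` function itself is hypothetical, not something that exists in the repository.

```python
def inventory(lb_count=2, cp_count=3, worker_count=3, subnet="172.16.16"):
    """Render an INI-style Ansible inventory matching the layout shown above."""
    # (group name, alias prefix, hostname prefix, last-octet offset, node count)
    groups = [
        ("load_balancer", "lb", "loadbalancer", 50, lb_count),
        ("control_plane", "control", "kcontrolplane", 100, cp_count),
        ("workers", "worker", "kworker", 200, worker_count),
    ]
    lines = []
    for group, alias, hostname, offset, count in groups:
        lines.append(f"[{group}]")
        for i in range(1, count + 1):
            lines.append(
                f"{alias}{i} ansible_host={subnet}.{offset + i} new_hostname={hostname}{i}"
            )
        lines.append("")  # blank line between groups
    lines += ["[all:vars]", "ansible_python_interpreter=/usr/bin/python3"]
    return "\n".join(lines)


print(inventory())
```

With the default arguments this prints the same eight hosts as the file above.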

In this repository, this file sits in the same folder as the playbooks. You can point ansible to a different location by using the -i flag. We have set several variables in vars.yaml, so we refer to it by using the --extra-vars option.

At this stage, you can create the HA cluster with one command. However, it is good to go through each step of the way and explain what happens and the decisions I have made.

# yolo and run all playbooks

ansible-playbook -u root --key-file "vagrant" main.yaml --extra-vars "@vars.yaml"

If you decide against the single command and want to learn step by step (there are 7 playbooks in total), fasten your seat belt because we are going on a long and exciting ride!

…

If you have a team member that you want to give access to the VMs, this is an easy way to create a user and copy the user’s public key to all VMs.

# 01-initial.yaml
- hosts: all
  become: yes
  tasks:
    - name: create a user
      user: name=kubeadmin append=yes state=present groups=sudo createhome=yes shell=/bin/bash password={{ PASSWORD_KUBEADMIN | password_hash('sha512') }}

    - name: set up authorized keys for the user
      authorized_key: user=kubeadmin key="{{item}}"
      with_file:
        - ~/.ssh/id_rsa.pub

The playbook creates a user named kubeadmin and copies the public key to all VMs! To add more users, simply copy and paste both tasks with a different user and public key path.

For now, this user only belongs to the sudo group. Just make sure that this particular user is allowed sudo access! Notice that a password is also set for this user.

I find myself typing a lot of kubectl commands, so I like to alias it to something shorter like k. For example, k get no is short for kubectl get nodes.

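- hosts: control_plane
  become: yes
  tasks:
    - name: Add kubectl alias for user
      lineinfile:
        path: /home/kubeadmin/.bashrc
        line: "alias k='kubectl'"
        owner: kubeadmin
        # double quotes outside so the single quotes inside stay intact
        regexp: "^alias k='kubectl'$"
        state: present
        insertafter: EOF
        create: true

    # Sourcing in a one-off shell does not change later sessions;
    # the alias is picked up the next time kubeadmin opens a shell.
    - name: Source .bashrc
      shell: "source /home/kubeadmin/.bashrc"
      args:
        executable: /bin/bash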
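Since idempotency comes up a lot with ansible, here is a simplified model of what the lineinfile task does with its regexp and line arguments: replace a matching line if there is one, otherwise append. This is my own sketch of the behaviour, not ansible's actual implementation, and `ensure_line` is a made-up name.

```python
import re


def ensure_line(lines, regexp, line):
    """Return a copy of `lines` in which `line` is present exactly where needed.

    If a line matches `regexp`, the last such line is replaced by `line`.
    Otherwise `line` is appended at the end (like insertafter=EOF).
    """
    pattern = re.compile(regexp)
    updated = list(lines)
    for index in range(len(updated) - 1, -1, -1):
        if pattern.search(updated[index]):
            updated[index] = line
            return updated
    updated.append(line)
    return updated


bashrc = ["export EDITOR=vim"]
alias = "alias k='kubectl'"
once = ensure_line(bashrc, r"^alias k='kubectl'$", alias)
twice = ensure_line(once, r"^alias k='kubectl'$", alias)
print(once)
print(once == twice)  # running the task again changes nothing
```

The second run finds the line through the regexp and rewrites it with identical content, which is what makes the task safe to repeat.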

Run the playbook with the following command. Make sure you are in the ./ansible directory.

# step 1

ansible-playbook -u root --key-file "vagrant" 01-initial.yaml --extra-vars "@vars.yaml"

Next, we need to install essential packages to the nodes.

ansible-playbook -u root --key-file "vagrant" 02-packages.yaml

We always want to keep our VMs up to date, so we run the necessary apt commands. Thanks to ansible, we can install both keepalived and haproxy on each of the two load balancer nodes, as well as apt-transport-https on all eight nodes, in a single command.

# 02-packages.yaml
- hosts: all
  become: yes
  tasks:
    - name: update repo
      apt: update_cache=yes force_apt_get=yes cache_valid_time=3600

    - name: upgrade packages
      apt: upgrade=dist force_apt_get=yes

    - name: packages
      apt:
        name:
          - apt-transport-https
        state: present
        update_cache: true

- hosts: load_balancer
  become: yes
  tasks:
    - name: packages
      apt:
        name:
          - keepalived
          - haproxy
        state: present
        update_cache: true

Next is installing the load balancer, haproxy.

Several things happen here. We write the config file to /etc/haproxy/haproxy.cfg. It has four sections: global, defaults, frontend, and backend. A comprehensive explanation can be found on haproxy’s website, but the most important sections for us are frontend and backend. The frontend section is the entry point; notice that it binds to port 6443, which is the same as the default apiserver port. Since these load balancers are installed on separate nodes, this is ok. If you install the load balancers on the same nodes as the control planes, you need to change this port to something else.

# 03-lb.yaml
- hosts: load_balancer
  become: yes
  tasks:
    - name: haproxy
      copy:
        dest: "/etc/haproxy/haproxy.cfg"
        content: |
          global
            maxconn 50000
            log /dev/log local0
            user haproxy
            group haproxy

          defaults
            log global
            timeout connect 10s
            timeout client 30s
            timeout server 30s

          frontend kubernetes-frontend
            bind *:6443
            mode tcp
            option tcplog
            default_backend kubernetes-backend

          backend kubernetes-backend
            option httpchk GET /healthz
            http-check expect status 200
            mode tcp
            option check-ssl
            balance roundrobin
            server kcontrolplane1 {{ IP_HOST_CP1 }}:6443 check
            server kcontrolplane2 {{ IP_HOST_CP2 }}:6443 check
            server kcontrolplane3 {{ IP_HOST_CP3 }}:6443 check

mode tcp tells us that this is a Layer 4 (transport layer of the OSI model) load balancer. We use a simple round-robin strategy to distribute the requests among the three control plane nodes. Note that the IP addresses are double-moustache variables. The port stays at 6443 because we have no intention of changing the apiserver’s default port.

Next, the health check requests /healthz, which is the default health endpoint of the kubernetes apiserver, and simply checks for a 200 HTTP status.
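To illustrate what round-robin with health checks means in practice, here is a toy model in Python. It only demonstrates the idea of rotating through backends and skipping the ones marked unhealthy; it says nothing about how haproxy implements it internally, and the `RoundRobin` class is my own invention.

```python
from itertools import cycle


class RoundRobin:
    """Rotate through backends, skipping those currently marked unhealthy."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._ring = cycle(self._servers)

    def mark(self, server, healthy):
        """Record the result of a health check such as GET /healthz."""
        if healthy:
            self._healthy.add(server)
        else:
            self._healthy.discard(server)

    def pick(self):
        """Return the next healthy backend in rotation order."""
        for _ in range(len(self._servers)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backend available")


backends = ["172.16.16.101:6443", "172.16.16.102:6443", "172.16.16.103:6443"]
lb = RoundRobin(backends)
print([lb.pick() for _ in range(4)])  # wraps around after the third backend

lb.mark("172.16.16.102:6443", healthy=False)  # pretend its /healthz check failed
print([lb.pick() for _ in range(3)])  # the second backend is skipped
```

As long as at least one control plane node passes its check, requests to the load balancer keep being served.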

# vars.yaml

...

IP_HOST_CP1: 172.16.16.101

IP_HOST_CP2: 172.16.16.102

IP_HOST_CP3: 172.16.16.103

...

The haproxy website has a guide for calculating how many connections you can allow based on available RAM. The timeouts depend on your use case.

With this config file, we can verify if it is valid with the command

haproxy -f /etc/haproxy/haproxy.cfg -c

and it should return

Configuration file is valid

Next, we take a look at the keepalived configuration, starting with the vrrp_script section. Our virtual IP, 172.16.16.100, is defined under virtual_ipaddress.

The track_script section refers to a script that can tell the ‘aliveness’ of haproxy, our /etc/keepalived/check_apiserver.sh. keepalived runs it every 3 seconds. If it fails 5 times in a row (fall 5), the node is marked as down. This is where weight 2 from the vrrp_script section comes in: because the weight is positive, keepalived adds it to the base priority while the check passes, so a healthy node advertises 102 and a failing node falls back to 100. The healthy load balancer then has the higher priority (102 > 100) and becomes the master. Once the check passes twice in a row (rise 2), the node gets its two points back.

vrrp_script check_apiserver {
  script "/etc/keepalived/check_apiserver.sh"
  interval 3
  timeout 10
  fall 5
  rise 2
  weight 2
}

vrrp_instance VI_1 {
  state BACKUP
  interface eth1
  virtual_router_id 1
  priority 100
  advert_int 5
  authentication {
    auth_type PASS
    auth_pass {{ PASSWORD_KEEPALIVED }}
  }
  virtual_ipaddress {
    172.16.16.100
  }
  track_script {
    check_apiserver
  }
}
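The interplay between priority and weight is easier to see with numbers. Below is a small simulation of the election. As I read the keepalived documentation, a positive weight is added to the priority while the check passes, rather than subtracted when it fails; either way, the healthy node ends up two points ahead. The base priority of 101 for loadbalancer1 is a hypothetical value picked for the example, and `LoadBalancer` and `elect_master` are names I made up.

```python
from dataclasses import dataclass


@dataclass
class LoadBalancer:
    name: str
    base_priority: int     # `priority` in vrrp_instance
    check_ok: bool = True  # result of check_apiserver.sh after fall/rise
    weight: int = 2        # `weight` in vrrp_script

    def effective_priority(self) -> int:
        if self.weight > 0:
            # Positive weight: bonus while the check passes.
            return self.base_priority + (self.weight if self.check_ok else 0)
        # Negative weight: penalty while the check fails.
        # (weight 0 has a different meaning in keepalived and is not modelled.)
        return self.base_priority + (0 if self.check_ok else self.weight)


def elect_master(nodes):
    """The node advertising the highest effective priority holds the floating IP."""
    return max(nodes, key=lambda node: node.effective_priority()).name


lb1 = LoadBalancer("loadbalancer1", base_priority=101)  # hypothetical value
lb2 = LoadBalancer("loadbalancer2", base_priority=100)

print(elect_master([lb1, lb2]))  # both healthy: 103 vs 102
lb1.check_ok = False             # haproxy on loadbalancer1 stops answering
print(elect_master([lb1, lb2]))  # 101 vs 102, so the backup takes over
```

One thing the numbers make obvious: the weight has to be larger than the gap between the two base priorities, otherwise a failing master would still outrank a healthy backup.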

Looking at the vrrp_instance section of keepalived, we have a state called BACKUP, the other being MASTER. Surprisingly, both load balancer nodes can have the exact same configuration file. If they do, they will pick one node to become the MASTER on their own. However, we aren’t going to do that. We will explicitly set a distinct config file for each load balancer.

The interface has to be eth1 as explained before, so always check with the ip a command.

This ‘aliveness’ script is adapted from the k8s website. We need to modify a couple of things. {{ VIRTUAL_IP }} is going to be 172.16.16.100. The haproxy port is defined by {{ K8S_API_SERVER_PORT }}, and it is going to be 6443. If you decide to install both haproxy and keepalived on each control plane node, then this port will clash with the apiserver’s default port, so in the haproxy config above you must change port 6443 to something else. Since we have the two load balancers on separate nodes from the control plane, we do not have to worry about this.

#!/bin/sh

errorExit() {
    echo "*** $*" 1>&2
    exit 1
}

curl --silent --max-time 2 --insecure https://localhost:{{ K8S_API_SERVER_PORT }}/ -o /dev/null \
    || errorExit "Error GET https://localhost:{{ K8S_API_SERVER_PORT }}/"

if ip addr | grep -q {{ VIRTUAL_IP }}; then
    curl --silent --max-time 2 --insecure https://{{ VIRTUAL_IP }}:{{ K8S_API_SERVER_PORT }}/ -o /dev/null \
        || errorExit "Error GET https://{{ VIRTUAL_IP }}:{{ K8S_API_SERVER_PORT }}/"
fi
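The logic of the script is short but easy to misread: localhost is always probed, while the virtual IP is only probed on the node that currently holds it. Here is the same decision expressed in Python as a sketch. The probe is passed in as a function so the logic can be exercised without a network; `https_probe` is my rough approximation of the curl call and `check_apiserver` is a name borrowed from the script, neither is part of keepalived.

```python
import ssl
import urllib.error
import urllib.request


def https_probe(url, timeout=2.0):
    """Roughly `curl --silent --max-time 2 --insecure URL`: any HTTP answer counts."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=context):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered; curl without --fail also exits 0 here
    except (urllib.error.URLError, OSError):
        return False


def check_apiserver(probe, local_addresses, virtual_ip, port=6443):
    """Return (exit_code, message) like the shell script above."""
    urls = [f"https://localhost:{port}/"]
    if virtual_ip in local_addresses:
        # Only the node holding the floating IP also checks it.
        urls.append(f"https://{virtual_ip}:{port}/")
    for url in urls:
        if not probe(url):
            return 1, f"*** Error GET {url}"
    return 0, ""


# Dry run with a fake probe that only reaches localhost.
only_localhost = lambda url: "localhost" in url
print(check_apiserver(only_localhost, ["172.16.16.52", "172.16.16.100"], "172.16.16.100"))
print(check_apiserver(only_localhost, ["172.16.16.51"], "172.16.16.100"))
```

On a real load balancer node you would pass `https_probe` as the first argument and the addresses reported by ip a as the second.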

The two keepalived instances authenticate to each other with a simple shared password (auth_type PASS). I generate the password with

openssl rand -base64 32
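If openssl is not at hand, the same kind of password can be produced with the Python standard library. This is just an equivalent sketch; `random_password` is a name I picked.

```python
import base64
import secrets


def random_password(num_bytes=32):
    """Base64-encode `num_bytes` of cryptographically secure random data."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


print(random_password())
```

As far as I know, keepalived only uses the first eight characters of auth_pass, so a longer password does no harm but does not add much either.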

The other two settings aren’t so important. For virtual_router_id, we can pick any arbitrary number from 1 to 255, as long as it is identical on both nodes, because both are members of the same virtual router. Each node advertises at a 5-second interval, set by advert_int.

Run with

ansible-playbook -u root --key-file "vagrant" 03-lb.yaml --extra-vars "@vars.yaml"

At the moment, there is nothing behind the load balancers because we have not initialised any control plane node yet. However, we can check if keepalived is working using a netcat command.

nc -v 172.16.16.100 6443

Installing k8s itself involves several complex steps. Since we provisioned the VMs using vagrant, there is a caveat that needs to be taken care of. The IP address we are concerned with is actually on interface eth1 instead of eth0. This is different from when I played around with terraform to provision AWS EC2 instances, where the default interface is eth0. If you are using bare metal, the interface could be different again. So always check by first SSH-ing into the node and running the ip a command.

ssh -i ./vagrant vagrant@172.16.16.101

ip a

It will look something like this

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:e7:bf:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.121.190/24 brd 192.168.121.255 scope global dynamic eth0

valid_lft 2492sec preferred_lft 2492sec

inet6 fe80::5054:ff:fee7:bf01/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:a4:cc:84 brd ff:ff:ff:ff:ff:ff

--> inet 172.16.16.51/24 brd 172.16.16.255 scope global eth1 <-- the IP address we defined is at eth1

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fea4:cc84/64 scope link

valid_lft forever preferred_lft forever

Once we’ve installed keepalived, we will be able to see the virtual IP on one of the two load

balancer nodes.

kubeadmin@loadbalancer2:~$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:52:d1:1d brd ff:ff:ff:ff:ff:ff

inet 192.168.121.173/24 brd 192.168.121.255 scope global dynamic eth0

valid_lft 3416sec preferred_lft 3416sec

inet6 fe80::5054:ff:fe52:d11d/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:1d:3f:8c brd ff:ff:ff:ff:ff:ff

inet 172.16.16.52/24 brd 172.16.16.255 scope global eth1

valid_lft forever preferred_lft forever

--> inet 172.16.16.100/32 scope global eth1 <-- our floating IP appears

valid_lft forever preferred_lft forever

inet6 fe80::5054:ff:fe1d:3f8c/64 scope link

valid_lft forever preferred_lft forever

The floating IP goes to whichever load balancer currently has the highest priority; in the output above, that is loadbalancer2.

It can take some time for this floating IP to show up, so make sure it is there before we initialise k8s. If in doubt, check using the journalctl command on each load balancer node.

sudo journalctl -flu keepalived

Moving forward with the k8s cluster installation, there are several server requirements. Swap must be disabled because, by default, the kubelet refuses to run with swap on. We comment out its /etc/fstab entry too so that it is not switched back on at reboot.

# 04-k8s.yaml
- hosts: control_plane,workers
  become: yes
  tasks:
    - name: Disable swap
      become: yes
      shell: swapoff -a

    - name: Disable SWAP in fstab since kubernetes can't work with swap enabled
      replace:
        path: /etc/fstab
        regexp: '^([^#].*?\sswap\s+sw\s+.*)$'
        replace: '# \1'

...
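The regular expression in the replace task is dense, so here it is applied to a made-up fstab in Python. As far as I know, ansible's replace module compiles its pattern in multi-line mode, which is why the sketch passes re.MULTILINE. The sample content and UUIDs are invented for the example.

```python
import re

# Same pattern as in the playbook: an uncommented line with a swap entry.
SWAP_LINE = re.compile(r"^([^#].*?\sswap\s+sw\s+.*)$", re.MULTILINE)


def comment_out_swap(fstab_text):
    """Prefix every active swap entry with '# ', leaving other lines alone."""
    return SWAP_LINE.sub(r"# \1", fstab_text)


SAMPLE_FSTAB = (
    "# /etc/fstab: static file system information.\n"
    "UUID=1111 / ext4 errors=remount-ro 0 1\n"
    "UUID=2222 none swap sw 0 0\n"
)

patched = comment_out_swap(SAMPLE_FSTAB)
print(patched)
print(comment_out_swap(patched) == patched)  # a second run is a no-op
```

Because the pattern insists on a first character other than #, an already commented entry is left alone, which keeps this particular task idempotent.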

k8s will manage the firewall rules it needs, so we disable ufw.

...

    - name: Disable firewall
      shell: |
        systemctl disable --now ufw >/dev/null 2>&1

...

K8s requires bridged traffic to be visible to iptables and ipv4 forwarding to be enabled, so we write the config to the relevant files.

...

    - name: bridge network
      copy:
        dest: "/etc/modules-load.d/containerd.conf"
        content: |
          overlay
          br_netfilter

    - name: forward ipv4 traffic
      copy:
        dest: "/etc/sysctl.d/kubernetes.conf"
        content: |
          net.bridge.bridge-nf-call-iptables = 1
          net.bridge.bridge-nf-call-ip6tables = 1
          net.ipv4.ip_forward = 1

    - name: apply bridge network
      become: yes
      shell: modprobe overlay && modprobe br_netfilter && sysctl --system

...

We could reboot the VMs to apply these configurations, but there is a quicker way. modprobe loads the modules into the kernel, and the sysctl command applies the new settings without a reboot.

The next task is to install a container runtime. This runtime is the one that starts and stops containers, among other things. The runtime was abstracted away from kubernetes when the container runtime interface (CRI) was created in 2016. There are a couple of well-known CRI implementations, including containerd and CRI-O. Dockershim support was removed in k8s v1.24, but docker uses containerd underneath anyway.

As mentioned before, we need an up-to-date containerd so that kubelet version 1.26 can work with it.

    - name: Apt-key for containerd.io [1/2]
      become: yes
      shell: |
        # assumption: the key has to end up at the path that signed-by refers to in the next task
        mkdir -p /etc/apt/keyrings
        curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor --yes -o /etc/apt/keyrings/docker.gpg

    - name: Add containerd.io repository [2/2]
      become: yes
      shell: |
        echo \
        "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian \
        $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

Thanks (but no thanks) to the way containerd is packaged, we need to make an adjustment to its config file. We have to tell containerd to use the systemd cgroup driver. If we don’t, it will use cgroupfs instead, and that will no longer match the kubelet, which uses systemd. Thus, we need to change the line SystemdCgroup = false to true in the section [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options].

For that, we regenerate the default config and patch it with sed.

    - name: Tell containerd to use systemd
      shell: |
        mkdir -p /etc/containerd && \
        containerd config default > /etc/containerd/config.toml && \
        sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/g' /etc/containerd/config.toml

The desired state of that config.toml is,

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

...

--> [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options] <-- for this

--> SystemdCgroup = true <-- desired value
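To double-check what the sed substitution does, here is the same edit in Python, run against a shortened, made-up excerpt of config.toml. The helper names are mine; on a real node you would read /etc/containerd/config.toml instead of the sample string.

```python
import re

SAMPLE_CONFIG = '''\
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  runtime_type = "io.containerd.runc.v2"
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = false
'''


def enable_systemd_cgroup(config_text):
    """Equivalent of: sed 's/SystemdCgroup = false/SystemdCgroup = true/g'."""
    return config_text.replace("SystemdCgroup = false", "SystemdCgroup = true")


def uses_systemd_cgroup(config_text):
    """True if some line sets SystemdCgroup to true."""
    pattern = r"^\s*SystemdCgroup\s*=\s*true\s*$"
    return re.search(pattern, config_text, re.MULTILINE) is not None


patched = enable_systemd_cgroup(SAMPLE_CONFIG)
print(uses_systemd_cgroup(SAMPLE_CONFIG), uses_systemd_cgroup(patched))
```

A check like `uses_systemd_cgroup` is handy after upgrades, because regenerating the default config silently resets the value to false.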

Next, we install kubelet, kubeadm, and kubectl.

    - name: add Kubernetes apt-key
      apt_key:
        url: https://packages.cloud.google.com/apt/doc/apt-key.gpg
        state: present

    - name: add Kubernetes' APT repository
      apt_repository:
        repo: deb https://apt.kubernetes.io/ kubernetes-xenial main
        state: present
        filename: 'kubernetes'

    - name: install kubelet
      apt:
        name: kubelet={{ K8S_VERSION }}
        state: present

    - name: install kubeadm
      apt:
        name: kubeadm={{ K8S_VERSION }}
        state: present

    - name: install kubectl
      apt:
        name: kubectl={{ K8S_VERSION }}
        state: present

The variable K8S_VERSION is defined in the vars.yaml file. Lastly, we put these three packages on hold so that we do not accidentally upgrade any of them. apt-mark hold takes package names only; whatever version is currently installed is the one that gets held.

- name: Hold versions
  shell: apt-mark hold kubeadm kubelet kubectl

We hold the versions because any k8s upgrade should be done manually, after reading the release notes carefully. We do not want to bring down our cluster, and there is an order in which the components should be upgraded. Finally, depending on the version you are running, there is a specific range of versions you can upgrade to. Look over k8s's version skew policy.
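To make the "specific range of versions" point concrete: kubeadm does not support skipping a minor version when upgrading, so 1.26 can go to 1.27 but not straight to 1.28. The sketch below encodes just that one rule. The function names are my own (hypothetical), and it assumes both versions share the same major number.

```shell
#!/bin/sh
# Extract the minor number from versions such as "1.26.0", "v1.26.0" or "1.26.0-00".
minor_of() {
    echo "$1" | sed 's/^v//' | cut -d. -f2
}

# Hypothetical check: succeed when going from version $1 to $2 stays within
# the same minor release or moves up by exactly one (same major assumed).
is_supported_upgrade() {
    step=$(( $(minor_of "$2") - $(minor_of "$1") ))
    [ "$step" -ge 0 ] && [ "$step" -le 1 ]
}

if is_supported_upgrade 1.26.0 1.27.3; then
    echo "1.26.0 -> 1.27.3: ok"
fi
if ! is_supported_upgrade 1.26.0 1.28.0; then
    echo "1.26.0 -> 1.28.0: skips a minor version"
fi
```

This is not a replacement for reading the skew policy, which also covers how far the kubelet and kubectl may lag behind the apiserver.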

Ok. Run this playbook with

ansible-playbook -u root --key-file "vagrant" 04-k8s.yaml --extra-vars "@vars.yaml"

Our next step is to initialise the control plane. We have already set up haproxy and keepalived, and the floating IP 172.16.16.100 is going to be our entry point.

The playbook runs kubeadm init on a single node, in this case control1, but it does not matter which one as long as it is one of the control plane nodes.

# 05-control-plane.yaml
- hosts: control1
  become: yes
  tasks:
    - name: initialise the cluster
      shell: |
        kubeadm init --control-plane-endpoint="{{ VIRTUAL_IP }}:{{ K8S_API_SERVER_PORT }}" \
          --upload-certs \
          --pod-network-cidr=192.168.0.0/16 \
          --apiserver-advertise-address=172.16.16.101
      register: cluster_initialized

We use the kubeadm init command, and we tell it where the control plane entry point is. The port number is the default 6443. Best practice is to use a DNS name rather than an IP address for --control-plane-endpoint, for example --control-plane-endpoint="myk8s.local:6443". But you need to add myk8s.local to the /etc/hosts file on all control plane nodes (and on any node that will join the cluster) first.
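Adding that /etc/hosts entry can be scripted so that it is safe to run more than once. The helper below is a hypothetical sketch, not something from the playbooks, and it assumes myk8s.local should resolve to the keepalived virtual IP 172.16.16.100.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already listed.
# usage: add_host_entry IP NAME FILE
add_host_entry() {
    if ! awk -v name="$2" '{ for (i = 2; i <= NF; i++) if ($i == name) found = 1 } END { exit !found }' "$3" 2>/dev/null; then
        printf '%s %s\n' "$1" "$2" >> "$3"
    fi
}

# On each node this would be run as root:
# add_host_entry 172.16.16.100 myk8s.local /etc/hosts
```

The awk check compares whole host names, so an entry such as notmyk8s.local does not count as a match.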

--upload-certs uploads the control plane certificates to a Secret object in the cluster, which will expire in two hours. This is enough time to join the other control plane nodes. Say in the future you want to add more control plane nodes to this cluster: you will need to generate a new certificate key (and a new token). Worker nodes only need a valid join token.

The pod network CIDR tells k8s the range of IP addresses that pods are allocated from. This CIDR depends on the Container Network Interface (CNI) plugin that we choose. In our case, we choose Calico, whose default configuration expects 192.168.0.0/16. If you had chosen Flannel, for example, it would be 10.244.0.0/16 instead. For a list of some CNI alternatives, look over at https://kubernetes.io/docs/concepts/cluster-administration/addons/#networking-and-network-policy.

Finally, a special argument is needed because we have used vagrant and libvirt. We need to tell the apiserver which IP address to advertise, so we pass the control1 node's IP address with the --apiserver-advertise-address option. This is a special case specific to vagrant. If you are using other types of nodes, such as ec2 or digital ocean, this option might not be needed.

The kubeadm init step will take some time to complete, almost two minutes for me. If for some

reason this step fails, and we want to retry, then we need to reset both the cluster and firewall.

kubeadm reset --force && rm -rf .kube/ && rm -rf /etc/kubernetes/ && rm -rf /var/lib/kubelet/ \

&& rm -rf /var/lib/etcd && iptables -F && iptables -t nat -F && \

iptables -t mangle -F && iptables -X

# or using ansible

ansible-playbook -u root --key-file "vagrant" XX-kubeadm_reset.yaml

If everything goes well, we want to be able to use the kubectl command. For that to work, we can either export KUBECONFIG with the path /etc/kubernetes/admin.conf (and add that line to ~/.bashrc), or we can simply copy the config file to $HOME/.kube/config.

- name: create .kube directory
  become: yes
  become_user: kubeadmin
  file:
    path: /home/kubeadmin/.kube
    state: directory
    mode: 0755

- name: copy admin.conf to user's kube config
  copy:
    src: /etc/kubernetes/admin.conf
    dest: /home/kubeadmin/.kube/config
    remote_src: yes
    owner: kubeadmin
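For comparison, the first option mentioned earlier (exporting KUBECONFIG instead of copying the file) could look roughly like this. It is a sketch for a quick check as root, since /etc/kubernetes/admin.conf is normally readable by root only.

```shell
#!/bin/sh
# Point kubectl at the admin kubeconfig that kubeadm init generated.
export KUBECONFIG=/etc/kubernetes/admin.conf

# Only call kubectl when it is installed and the file is readable by this user.
if command -v kubectl >/dev/null 2>&1 && [ -r "$KUBECONFIG" ]; then
    kubectl cluster-info
fi
```

Copying the file into the kubeadmin user's home, as the playbook does, avoids having to work as root for day-to-day kubectl use.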

Once this is done, you can run kubectl cluster-info in control1 node.

kubeadmin@kcontrolplane1:~$ kubectl cluster-info

Kubernetes control plane is running at https://172.16.16.100:6443

CoreDNS is running at https://172.16.16.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Note that its entry point is the virtual IP we made using keepalived. Congratulations, you now

have a k8s cluster! Now let us make it highly available.

Before we join more nodes to our control plane, let us see if we can access our first control plane node using our floating IP. Remember that haproxy pings the /healthz endpoint of the kubernetes apiserver to know whether it is alive? Let us see the response.

First, we need to SSH into whichever load balancer node currently holds the virtual IP. Check with the ip a command and look for the 172.16.16.100 address.

ssh kubeadmin@172.16.16.51

ip a

Once you are on the right node, simply curl the control plane apiserver health endpoint.

# -k ignore ssl cert, -v is verbose mode

curl -kv https://172.16.16.100:6443/healthz

You will get a 200 HTTP status response.

* Trying 172.16.16.100:6443...

* Connected to 172.16.16.100 (172.16.16.100) port 6443 (#0)

* ALPN, offering h2

* ALPN, offering http/1.1

* successfully set certificate verify locations:

* CAfile: /etc/ssl/certs/ca-certificates.crt

* CApath: /etc/ssl/certs

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.3 (IN), TLS handshake, Encrypted Extensions (8):

* TLSv1.3 (IN), TLS handshake, Request CERT (13):

* TLSv1.3 (IN), TLS handshake, Certificate (11):

* TLSv1.3 (IN), TLS handshake, CERT verify (15):

* TLSv1.3 (IN), TLS handshake, Finished (20):

* TLSv1.3 (OUT), TLS change cipher, Change cipher spec (1):

* TLSv1.3 (OUT), TLS handshake, Certificate (11):

* TLSv1.3 (OUT), TLS handshake, Finished (20):

* SSL connection using TLSv1.3 / TLS_AES_128_GCM_SHA256

* ALPN, server accepted to use h2

* Server certificate:

* subject: CN=kube-apiserver

* start date: Nov 23 09:53:08 2022 GMT

* expire date: Nov 23 09:55:33 2023 GMT

* issuer: CN=kubernetes

* SSL certificate verify result: unable to get local issuer certificate (20), continuing anyway.

* Using HTTP2, server supports multi-use

* Connection state changed (HTTP/2 confirmed)

* Copying HTTP/2 data in stream buffer to connection buffer after upgrade: len=0

* Using Stream ID: 1 (easy handle 0x55ae57c6fad0)

> GET /healthz HTTP/2

> Host: 172.16.16.100:6443

> user-agent: curl/7.74.0

> accept: */*

>

* TLSv1.3 (IN), TLS handshake, Newsession Ticket (4):

* Connection state changed (MAX_CONCURRENT_STREAMS == 250)!

< HTTP/2 200 <-------------------------------------------------------------- we want 200

< audit-id: ebc57fd0-a58d-4a30-8c2a-5584ee50fd90

< cache-control: no-cache, private

< content-type: text/plain; charset=utf-8

< x-content-type-options: nosniff

< x-kubernetes-pf-flowschema-uid: eb7cafce-8a2e-4dde-900e-ef916d1edc9e

< x-kubernetes-pf-prioritylevel-uid: 60dc2404-44cb-4b46-992b-8561247ff4f1

< content-length: 2

< date: Fri, 02 Dec 2022 06:52:06 GMT

<

* Connection #0 to host 172.16.16.100 left intact
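If you only care about the status code and not the whole TLS conversation, curl can print just that. The two helper functions below are hypothetical names of my own; the curl flags themselves are standard.

```shell
#!/bin/sh
# Print only the HTTP status code of a URL ("000" when the connection fails).
# -k skips certificate verification, -s silences progress, -o discards the body.
http_status() {
    curl -k -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

# Hypothetical helper: translate a status code into a short verdict.
verdict() {
    if [ "$1" = "200" ]; then
        echo "healthy"
    else
        echo "unhealthy ($1)"
    fi
}

# Usage on a load balancer node:
# verdict "$(http_status https://172.16.16.100:6443/healthz)"
```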

Now that we have successfully reached the apiserver, let us join two more nodes to the control plane. To achieve this, we simply generate both a certificate key and a join token.

kubeadm init phase upload-certs --upload-certs (1)

kubeadm token create --print-join-command (2)

Using the information from these two outputs, we join both control2 and control3 nodes to control1.

- hosts: control2,control3
  become: yes
  tasks:
    - debug: msg="{{ hostvars['control1'].cert_command }}"

    - debug: var=ansible_eth1.ipv4.address

    - name: join control-plane
      shell: "{{ hostvars['control1'].join_command }} \
        --control-plane \
        --certificate-key={{ hostvars['control1'].cert_command }} \
        --apiserver-advertise-address={{ ansible_eth1.ipv4.address|default(ansible_all_ipv4_addresses[0]) }} \
        >> node_joined.txt"
      register: node_joined

{{ hostvars['control1'].join_command }} is the join command that comes from (2).

--control-plane indicates that we are joining as a control plane node.

--certificate-key value is obtained from the new temporary certificate key we generated in (1).

--apiserver-advertise-address is important because of the vagrant setup we have. Again, ignore it if you are using other types of nodes. The expression obtains the current IPv4 address of each of the control2 and control3 nodes.
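The playbook refers to cert_command without showing how it is filled in. As far as I can tell, kubeadm prints the certificate key on the last line of the upload-certs output, so one way to capture it is to keep only that line. The sample output and the key below are made-up placeholders for illustration, and extract_cert_key is a hypothetical helper.

```shell
#!/bin/sh
# kubeadm prints the certificate key as the last line of its output,
# so the last line is all we keep.
extract_cert_key() {
    tail -n 1
}

# Illustrative output with a made-up placeholder key, not a real one.
sample_output='[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key:
0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef'

printf '%s\n' "$sample_output" | extract_cert_key

# On control1 the real call would look like:
# kubeadm init phase upload-certs --upload-certs | extract_cert_key
```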

Finally, we copy /etc/kubernetes/admin.conf to $HOME/.kube/config to be able to use the

kubectl command. Run this playbook with

ansible-playbook -u root --key-file "vagrant" 05-control-plane.yaml --extra-vars "@vars.yaml"

On successful join, run kubectl get no to see all nodes. no is short for nodes.

kubeadmin@kcontrolplane1:~$ kubectl get no

NAME STATUS ROLES AGE VERSION

kcontrolplane1 Ready control-plane 82m v1.26.0

kcontrolplane2 Ready control-plane 80m v1.26.0

kcontrolplane3 Ready control-plane 80m v1.26.0

Next task is to join our three worker nodes to the cluster. These worker nodes are where our applications will live. For this, we run the following playbook.

# 06-workers.yaml
- hosts: control1
  become: yes
  gather_facts: false
  tasks:
    - name: get join command
      shell: kubeadm token create --print-join-command
      register: join_command_raw

    - name: set join command
      set_fact:
        join_command: "{{ join_command_raw.stdout_lines[0] }}"

- hosts: workers
  become: yes
  tasks:
    - name: join cluster
      shell: "{{ hostvars['control1'].join_command }} >> node_joined.txt"
      args:
        chdir: $HOME
        creates: node_joined.txt

The kubeadm token create --print-join-command step is exactly the same as in the previous playbook. It is repeated here because if we need to add more worker nodes in the future, we can simply re-run this playbook. Thanks to creates: node_joined.txt, the join task is skipped on worker nodes that have already joined, so it won't affect the currently running ones.
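The reason a re-run leaves existing workers alone is the creates: node_joined.txt argument, which skips the task when that file already exists. The same idea in plain shell looks roughly like the sketch below. run_once is a hypothetical helper, and unlike the playbook it only keeps the marker file when the command succeeds.

```shell
#!/bin/sh
# Hypothetical helper mimicking Ansible's `creates`: run a command only if
# the marker file is missing, and keep its output as the marker on success.
# usage: run_once MARKER COMMAND [ARGS...]
run_once() {
    _marker=$1
    shift
    if [ -e "$_marker" ]; then
        return 0    # already done on an earlier run
    fi
    if "$@" > "$_marker.tmp"; then
        mv "$_marker.tmp" "$_marker"
    else
        rm -f "$_marker.tmp"
        return 1
    fi
}

# Example with a harmless command standing in for the join command.
marker=$(mktemp -u)
run_once "$marker" echo "joined"    # runs
run_once "$marker" echo "joined"    # skipped, marker exists
cat "$marker"
rm -f "$marker"
```

One thing to watch for in the playbook version: the >> redirect creates node_joined.txt even when the join command fails, so after a failed join you may need to delete that file before re-running.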

Run with

ansible-playbook -u root --key-file "vagrant" 06-worker.yaml

One last thing: we want to be able to use the kubectl command from the host machine. So we copy /etc/kubernetes/admin.conf from one of the control plane nodes to our host. This is not a compulsory step because we can already run kubectl commands inside the cluster by SSH-ing in first. The reason we do this is so that we can port forward the nginx container and see its output in a browser on our local computer, which I will show shortly.

ansible-playbook -u root --key-file "vagrant" 07-k8s-config.yaml

Now you can run kubectl commands on the local computer. If you check the nodes, you will see six of them. Before you can deploy your apps to this cluster, you need to make sure the worker nodes are ready first, which can take about two minutes. What I like to do is to use the watch command, for example watch -n 1 kubectl get no.

kubeadmin@kcontrolplane1:~$ kubectl get no

NAME STATUS ROLES AGE VERSION

kcontrolplane1 Ready control-plane 82m v1.26.0

kcontrolplane2 Ready control-plane 80m v1.26.0

kcontrolplane3 Ready control-plane 80m v1.26.0

kworker1 Ready <none> 79m v1.26.0

kworker2 Ready <none> 79m v1.26.0

kworker3 Ready <none> 79m v1.26.0
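If you would rather script the wait than stare at watch, the STATUS column is easy to parse. The helper below is a hypothetical sketch that counts nodes that are not yet Ready from the kubectl get no output.

```shell
#!/bin/sh
# Count nodes whose STATUS column is anything other than "Ready".
# Reads `kubectl get no` output on stdin and skips the header line.
count_not_ready() {
    awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage against a live cluster:
# kubectl get no | count_not_ready
```

A status such as Ready,SchedulingDisabled is counted as not ready by this sketch. kubectl also has a built-in alternative, kubectl wait --for=condition=Ready nodes --all --timeout=300s, which blocks until every node reports Ready.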

Congratulations. You now have a highly available k8s cluster running on your machine!

Now, to verify that we can deploy something to the k8s cluster, let us try deploying nginx and, additionally, accessing it from the local machine.

To do that we need to do two things. First, create a deployment. This will download the nginx image and deploy a pod in our cluster. Secondly, we need to expose the port, so we can access the pod.

On the local machine, run:

kubectl create deployment nginx-deployment --image=nginx

kubectl expose deployment nginx-deployment --port=80 --target-port=80

Nginx listens on port 80 by default, so that is our --target-port, the port on the container. --port is the port the service itself listens on; it is an arbitrary choice, and we also pick 80.
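To see which flag ends up where, it helps to look at the Service that kubectl expose creates. The sketch below prints a roughly equivalent manifest from a shell function. service_manifest is a hypothetical helper, and the app selector assumes the label that kubectl create deployment puts on the pods (app set to the deployment name).

```shell
#!/bin/sh
# Hypothetical helper: print a ClusterIP Service manifest.
# usage: service_manifest NAME SERVICE_PORT CONTAINER_PORT
service_manifest() {
    cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
spec:
  selector:
    app: $1
  ports:
    - port: $2
      targetPort: $3
EOF
}

service_manifest nginx-deployment 80 80
```

Here port comes from --port and targetPort from --target-port. Against a live cluster, kubectl expose deployment nginx-deployment --port=80 --target-port=80 --dry-run=client -o yaml prints the real manifest without creating anything.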

Now if we check the pods,

kubectl get po

it will show that it is still creating.

$ kubectl get po

NAME READY STATUS RESTARTS AGE

nginx-deployment-5fbdf85c67-jgfzj 0/1 ContainerCreating 0 2s

We can use the watch command like this

watch -n 1 kubectl get po

and now we know the pod is ready.

Every 1.0s: kubectl get po

NAME READY STATUS RESTARTS AGE

nginx-deployment-5fbdf85c67-jgfzj 1/1 Running 0 85s

We can also confirm the IP address of the pod by using the wide option. You will see that it is assigned an address from the 192.168.0.0/16 subnet for calico, which we defined when we initialised the cluster.

kubeadmin@kcontrolplane1:~$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-5fbdf85c67-jgfzj 1/1 Running 0 6m40s 192.168.140.1 kworker3 <none> <none>
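To convince ourselves that 192.168.140.1 really falls inside 192.168.0.0/16, we can do the subnet arithmetic in shell. This is a sketch with hypothetical helper names, using plain integer arithmetic on IPv4 addresses only.

```shell
#!/bin/sh
# Convert a dotted IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when address $1 belongs to the CIDR block $2 (for example 192.168.0.0/16).
in_cidr() {
    net=${2%/*}
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

if in_cidr 192.168.140.1 192.168.0.0/16; then
    echo "pod IP is inside the pod network CIDR"
fi
```

The /16 mask keeps the first two octets, so any address starting with 192.168 matches, while a service address such as 10.103.141.186 does not.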

There are a number of ways to access our container. When we expose the deployment using the kubectl expose deployment command, it creates a service of type ClusterIP by default. With this type of service, the pod can be reached from any node, as long as you are within the k8s cluster. So, you need to SSH into one of the control plane nodes beforehand. For example, let us check the service.

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 9d

nginx-deployment ClusterIP 10.103.141.186 <none> 80/TCP 6d23h

As you can see, the service is assigned a cluster IP of 10.103.141.186. So once you SSH in, you can curl the service, and you will get an HTML response from nginx.

ssh kubeadmin@172.16.16.103

curl 10.103.141.186

For now, we are most interested in accessing the nginx default page using a local browser. To do that, we need to perform a port forward. We forward nginx to a different local port because I already have nginx installed locally. Let us pick an unprivileged port, which is anything between 1024 and 65535, say 8080.

In production, you would want to look at an ingress controller like nginx, together with a load balancer like metallb. We will explore that option in part three. For now, a port forward suffices for local access.

$ kubectl port-forward deployment/nginx-deployment 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

Handling connection for 8080

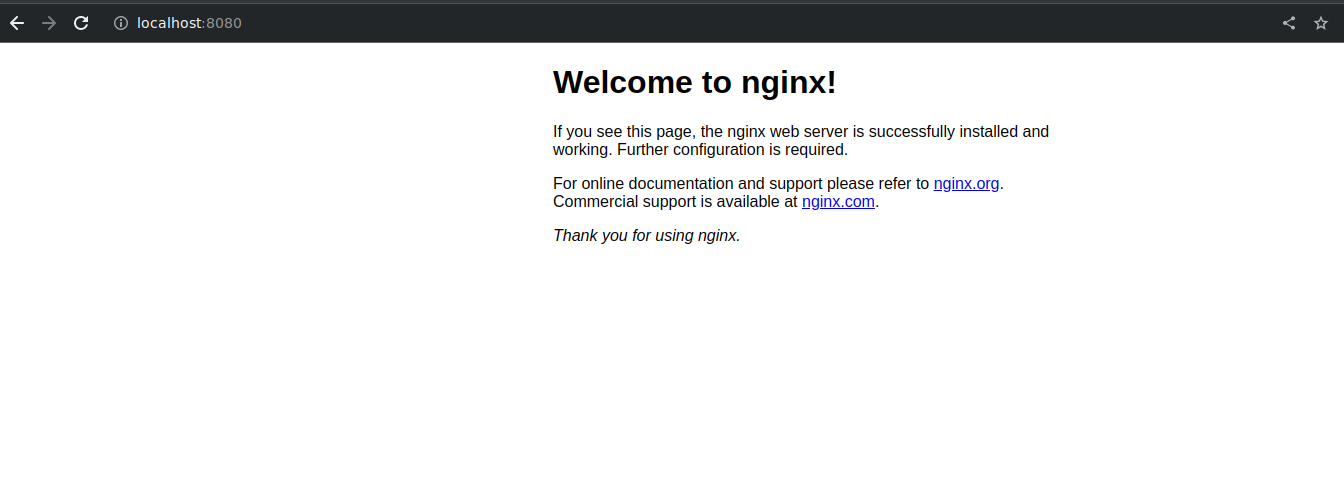

Now go to your browser and access http://localhost:8080, and you will see the default nginx welcome page!

Once you are done playing with the cluster, you can shut down the VMs by running vagrant halt. To start them again, simply run vagrant up.

Conclusion

It has been a long journey, and this post has put a lot of emphasis on creating a highly-available k8s cluster. This post might be long (and complex), but in reality kubeadm has made the process easy by automating many tedious steps. If you want to dive into more details, however, you can install k8s step-by-step using Kelsey Hightower's Kubernetes The Hard Way.

Ok, so the question is, is this k8s cluster production-ready? We already have a highly-available setup. Or do we? We have only installed k8s on a single computer. What if someone trips over the router cable! So best practice is to have each of the three control plane nodes installed in a different availability zone, for example one node in each of three data centres in Sydney. That way, if power goes out in one data centre, the other two can pick up the traffic. More details are in the documentation. To add more resilience, we can use one node in each of aws, gcp, and azure.

Of course, there is still a lot more to talk about in k8s since we have only scratched the surface, and we have only deployed a simple nginx server. What I will do in the next part of the series is deploy containerised front and back end applications into our cluster, and then do cool stuff like increasing replication and updating application versions, and watch how k8s magically does it for us. Visibility into our applications is important as well, so we will look at opentelemetry for observability.